Malaviya National Institute of Technology Jaipur

Introduction to Naive Bayes Classifier

What is a Naive Bayes Classifier?

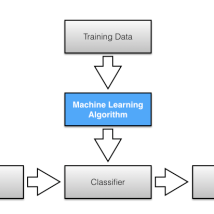

Naive Bayes is a family of algorithms that predict the category of a given sample by applying Bayes’ theorem with a strong (naive) assumption: every feature is independent of the others given the class. They are probabilistic classifiers, so they calculate the probability of each category using Bayes’ theorem and output the category with the highest probability. Naive Bayes classifiers have been applied successfully in many domains, particularly Natural Language Processing (NLP), and are known for producing simple yet well-performing models, especially in document classification and disease prediction.

Applying Naive Bayes to NLP Problems:

Naive Bayes is a simple (naive) classification method based on Bayes’ rule. For text, it relies on a very simple representation of the document, called the bag-of-words representation, which keeps the word counts and ignores word order.

Imagine we have two classes (positive and negative) and our input is the text of a movie review. We want to know whether the review is positive or negative. So, we may have a bag of positive words (e.g. love, amazing, hilarious, great) and a bag of negative words (e.g. hate, terrible, loss, pathetic, awful).

We may then count the number of times each of those words appears in the document, in order to classify the document as positive or negative.
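A minimal Python sketch of the counting idea just described, assuming the example word lists above are the whole lexicon and that a tie is reported as "undecided" (both choices are ours, for illustration only). Note that this shows only the intuition: it counts lexicon words but does not yet compute any probabilities.

```python
import re

# Illustrative word lists taken from the examples in the text, not a real sentiment lexicon.
POSITIVE_WORDS = {"love", "amazing", "hilarious", "great"}
NEGATIVE_WORDS = {"hate", "terrible", "loss", "pathetic", "awful"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens (order is irrelevant here)."""
    return re.findall(r"[a-z']+", text.lower())


def lexicon_label(review: str) -> str:
    """Compare how many positive vs. negative lexicon words occur in the review."""
    tokens = tokenize(review)
    pos = sum(1 for t in tokens if t in POSITIVE_WORDS)
    neg = sum(1 for t in tokens if t in NEGATIVE_WORDS)
    if pos > neg:
        return "positive"
    if neg > pos:
        return "negative"
    return "undecided"  # hypothetical tie-breaking choice


print(lexicon_label("I love this movie, the cast is amazing!"))  # positive
print(lexicon_label("What a terrible, pathetic script."))        # negative
```

Naive Bayes replaces this hand-made comparison with per-class word probabilities learned from labelled examples.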

This technique also works well for topic classification: say we have a set of academic papers, and we want to classify them into different topics (computer science, biology, mathematics).
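To make the topic example concrete, here is a from-scratch sketch of the multinomial variant of Naive Bayes (per-class word counts). The six "papers" are made-up one-line snippets, the class name `TinyMultinomialNB` is hypothetical (it is not scikit-learn's `MultinomialNB`), and we assume add-one (Laplace) smoothing and simply skip words never seen in training. Scores are kept as log probabilities so that long documents do not underflow.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase and split into alphabetic tokens (a bag of words ignores order)."""
    return re.findall(r"[a-z]+", text.lower())


class TinyMultinomialNB:
    """Hypothetical minimal multinomial Naive Bayes with add-alpha smoothing."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha

    def fit(self, docs, labels):
        self.class_counts = Counter(labels)      # documents per class, used for priors
        self.word_counts = defaultdict(Counter)  # class -> word -> count
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(tokenize(doc))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        self.total_words = {c: sum(self.word_counts[c].values()) for c in self.class_counts}
        return self

    def log_scores(self, doc):
        """Unnormalized log posterior: log P(c) + sum of log P(w | c) for each class."""
        n_docs = sum(self.class_counts.values())
        vocab_size = len(self.vocab)
        scores = {}
        for c, n_c in self.class_counts.items():
            score = math.log(n_c / n_docs)  # log prior
            for w in tokenize(doc):
                if w not in self.vocab:
                    continue  # skip words never seen in training
                numerator = self.word_counts[c][w] + self.alpha
                denominator = self.total_words[c] + self.alpha * vocab_size
                score += math.log(numerator / denominator)
            scores[c] = score
        return scores

    def predict(self, doc):
        scores = self.log_scores(doc)
        return max(scores, key=scores.get)


# Made-up training snippets standing in for academic papers.
docs = [
    "algorithm compiler software program",
    "program code algorithm data structure",
    "cell gene protein evolution",
    "gene dna cell biology",
    "theorem proof algebra",
    "proof lemma theorem geometry",
]
labels = ["computer science", "computer science", "biology", "biology",
          "mathematics", "mathematics"]

model = TinyMultinomialNB().fit(docs, labels)
print(model.predict("a new algorithm for compiler design"))  # computer science
```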

The difference between Naive Bayes and Multinomial Naive Bayes:

Naive Bayes is generic. Multinomial Naive Bayes is a specific instance of Naive Bayes in which P(Feature | Class) follows a multinomial distribution, which suits count features such as word counts.
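In the usual textbook formulation (our notation, not the original author's), a document $d$ in which word $w$ occurs $n_w(d)$ times is scored under class $c$ as shown below. The multinomial coefficient is dropped because it is the same for every class, and the word probabilities are estimated with add-$\alpha$ smoothing ($\alpha = 1$ is Laplace smoothing), where $N_{c,w}$ is the count of $w$ in the training documents of class $c$, $N_c$ is the total word count of class $c$, and $V$ is the vocabulary.

$$P(d \mid c) \propto \prod_{w \in V} P(w \mid c)^{\,n_w(d)}, \qquad \hat{P}(w \mid c) = \frac{N_{c,w} + \alpha}{N_c + \alpha\,|V|}$$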

But why Naive Bayes classifiers?

We do have other alternatives for NLP problems, such as Support Vector Machines (SVMs) and neural networks. However, the simple design of Naive Bayes classifiers makes them very attractive for such problems. Moreover, they have been shown to be fast, reliable and accurate in a number of NLP applications.

MNB (Multinomial Naive Bayes) is stronger for snippets than for longer documents. Ng and Jordan (2002) showed that NB (Naive Bayes) is better than SVM/logistic regression (LR) when there are few training cases, and MNB has also been found to be better with short documents. While an SVM usually beats NB once it has more than 30–50 training cases, MNB has been reported to remain better on snippets even with relatively large training sets (9k cases).

How does it work?

In order to understand how Naive Bayes classifiers work, I have to briefly recapitulate the concept of Bayes’ rule. The probability model that was formulated by Thomas Bayes (1701-1761) is quite simple yet powerful; it can be written down in simple words as follows:

Posterior Probability = (Conditional Probability * Prior Probability) / Evidence
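The word equation above can be checked with a few lines of arithmetic. The numbers below are hypothetical, picked only to illustrate the computation; the evidence is obtained by summing conditional probability × prior over both classes (the law of total probability).

```python
def posterior(likelihood, prior, evidence):
    """Bayes' rule: posterior = conditional probability * prior / evidence."""
    return likelihood * prior / evidence


# Hypothetical values, chosen only to illustrate the arithmetic.
prior_diabetes = 0.10      # P(diabetes)
p_x_given_diabetes = 0.60  # P(x | diabetes)
p_x_given_healthy = 0.05   # P(x | not-diabetes)

# Evidence P(x) by the law of total probability over the two classes.
evidence = p_x_given_diabetes * prior_diabetes + p_x_given_healthy * (1 - prior_diabetes)
p_diabetes_given_x = posterior(p_x_given_diabetes, prior_diabetes, evidence)
print(round(evidence, 3), round(p_diabetes_given_x, 3))  # 0.105 0.571
```

Even though the observation is twelve times more likely under "diabetes", the low prior keeps the posterior near 0.57 with these made-up numbers.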

Bayes’ theorem forms the core of the whole concept of Naive Bayes classification. The posterior probability, in the context of a classification problem, can be interpreted as: “What is the probability that a particular object belongs to a class given its observed feature values?”

A more concrete example would be: “What is the probability that a person has diabetes given a certain value for a pre-breakfast blood glucose measurement and a certain value for a post-breakfast blood glucose measurement?”

$$P(\text{diabetes} \mid x_i), \qquad x_i = [90\ \text{mg/dL},\ 145\ \text{mg/dL}]$$

Let

- $x_i$ be the feature vector of sample $i$, $i \in \{1, 2, \ldots, n\}$,
- $\omega_j$ be the notation of class $j$, $j \in \{1, 2, \ldots, m\}$,
- and $P(x_i \mid \omega_j)$ be the probability of observing sample $x_i$ given that it belongs to class $\omega_j$.

The general notation of the posterior probability can be written as

$$P(\omega_j \mid x_i) = \frac{P(x_i \mid \omega_j) \cdot P(\omega_j)}{P(x_i)}$$

The objective in naive Bayes classification is to maximize the posterior probability given the training data: assigning each sample to the class with the highest posterior gives the decision rule.
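The “naive” part enters when $P(x_i \mid \omega_j)$ is expanded. Writing the $k$-th of the $d$ features of sample $i$ as $x_{ik}$ (notation introduced here for illustration), the conditional-independence assumption factorizes the likelihood, and because the evidence $P(x_i)$ is the same for every class it can be dropped from the maximization:

$$P(x_i \mid \omega_j) = \prod_{k=1}^{d} P(x_{ik} \mid \omega_j), \qquad \hat{\omega} = \arg\max_{j}\; P(\omega_j) \prod_{k=1}^{d} P(x_{ik} \mid \omega_j)$$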

To continue with our example above, we can formulate the decision rule based on the posterior probabilities as follows:

Classify a person as having diabetes if

$$P(\text{diabetes} \mid x_i) \ge P(\text{not-diabetes} \mid x_i);$$

otherwise, classify the person as healthy.
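A minimal sketch of this decision rule for the two glucose features. Two assumptions are ours, not the original text's: the training readings are made-up numbers (not clinical data), and each feature is modelled as normally distributed within each class (the common "Gaussian Naive Bayes" choice). Since $P(x_i)$ is shared by both classes, comparing the unnormalized posteriors is enough.

```python
import math
from statistics import mean, pvariance

# Made-up (pre-breakfast, post-breakfast) glucose readings in mg/dL, NOT clinical data.
training = {
    "diabetes": [(130, 210), (145, 230), (160, 250), (125, 200)],
    "not-diabetes": [(85, 120), (90, 130), (95, 125), (80, 115)],
}


def gaussian_pdf(x, mu, var):
    """Normal density N(x; mu, var), the assumed per-feature class likelihood."""
    return math.exp(-((x - mu) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def fit(data):
    """Estimate each class prior and the per-feature mean and variance."""
    n_total = sum(len(rows) for rows in data.values())
    params = {}
    for label, rows in data.items():
        columns = list(zip(*rows))  # one tuple of values per feature
        params[label] = {
            "prior": len(rows) / n_total,
            "stats": [(mean(col), pvariance(col)) for col in columns],
        }
    return params


def unnormalized_posterior(params, x):
    """P(class) * prod_k P(x_k | class); P(x) is omitted because both classes share it."""
    scores = {}
    for label, p in params.items():
        score = p["prior"]
        for value, (mu, var) in zip(x, p["stats"]):
            score *= gaussian_pdf(value, mu, var)  # naive independence assumption
        scores[label] = score
    return scores


def classify(params, x):
    """Decision rule from the text: diabetes if its posterior is >= the other one."""
    s = unnormalized_posterior(params, x)
    return "diabetes" if s["diabetes"] >= s["not-diabetes"] else "healthy"


params = fit(training)
print(classify(params, (90, 145)))   # healthy (with these made-up readings)
print(classify(params, (150, 240)))  # diabetes
```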

Drawbacks of the Naive Bayes Classifier

- The first disadvantage is that the Naive Bayes classifier makes a very strong assumption about the shape of your data distribution, i.e. that any two features are independent given the output class. When this does not hold, the estimated probabilities can be badly off, hence the name “naive” classifier. This is not as terrible as people generally think, because the NB classifier can still be optimal even if the assumption is violated.

- Another problem arises from data scarcity. For every possible value of a feature, you need to estimate a likelihood with a frequentist approach (relative frequencies). This can push probabilities towards 0 or 1, which in turn leads to numerical instabilities and worse results. In this case, you need to smooth your probabilities in some way (e.g. with additive smoothing, as in sklearn) or impose some prior on your data; however, you may argue that the resulting classifier is no longer naive.

- A third problem arises for continuous features. It is common to use a binning procedure to make them discrete, but if you are not careful you can throw away a lot of information.
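The data-scarcity problem from the second point is easy to reproduce. In the sketch below (hypothetical word counts for a single class), the raw frequency estimate gives an unseen word probability 0, which drives the log-likelihood of any document containing it to minus infinity; add-alpha smoothing keeps every estimate positive. Working in log space, as here, is also the usual way to avoid the underflow that comes from multiplying many small probabilities. scikit-learn's `MultinomialNB` exposes this kind of additive smoothing through its `alpha` parameter.

```python
import math
from collections import Counter

# Hypothetical word counts observed in the training documents of one class.
class_counts = Counter({"love": 3, "great": 2, "amazing": 1})
vocabulary = {"love", "great", "amazing", "boring"}


def word_prob(word, counts, vocab, alpha=0.0):
    """Estimate P(word | class) with add-alpha smoothing; alpha=0 is the raw frequency."""
    total = sum(counts.values())
    return (counts[word] + alpha) / (total + alpha * len(vocab))


def log_likelihood(words, counts, vocab, alpha):
    """Sum of log P(w | class); returns -inf as soon as one word has zero probability."""
    total = 0.0
    for w in words:
        p = word_prob(w, counts, vocab, alpha)
        if p == 0.0:
            return float("-inf")
        total += math.log(p)
    return total


doc = ["love", "great", "boring"]
print(word_prob("boring", class_counts, vocabulary))             # 0.0
print(word_prob("boring", class_counts, vocabulary, alpha=1.0))  # 0.1
print(log_likelihood(doc, class_counts, vocabulary, alpha=0.0))  # -inf
```

With `alpha=1.0` the same document gets a finite score, log(0.4) + log(0.3) + log(0.1).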